
Jerry Pournelle jerryp@jerrypournelle.com

www.jerrypournelle.com

Copyright 2009 Jerry E. Pournelle, Ph.D.

The Black Screen of Death Story

This began with a letter from a reader:

Thought I'd copy and send this to you FYI.

http://www.msnbc.msn.com/id/34223754/ns/technology_and_science-security/

The link led to an MSNBC article that cited a British company:

British security firm Prevx writes about the problem on its blog, and suggests following this procedure: ...

The recommended procedure included going to the Prevx site and downloading a fix for the "black screen of death," which was said to be common among Windows (Windoze?) users. Other technical publishers, including IDG, picked up the story, and soon the Internet was abuzz with Black Screen of Death stories, even though none of the stories I saw were first-person accounts; they all described what had happened to someone else.

Eventually the story and its coverage sparked considerable discussion about the quality of technical journalism in the Internet Age.

I ran this in my journal with comments including warnings against the "remedy" from Peter Glaskowsky and security expert Rick Hellewell. My advice was to wait for some warning from Microsoft before acting. I also pointed out that I had never heard of this problem, and knew no one who had it; had any readers actually seen it? I never got any reports of the problem; but I did get considerable mail on computer journalism, and the discussion was illuminating. It began with an explication from Ed Bott excoriating the computer press.

I invited comments among my advisors. First was Peter Glaskowsky:

Coming from the Microprocessor Report tradition, where we thought it was better to say nothing than to say something that might even POSSIBLY be wrong, I am very sensitive to the risks of this new style of journalism.

But I know it's something we'll adapt to. When I saw those headlines, I didn't assume there actually WERE millions of victims of a faulty Microsoft patch. I just assumed someone was claiming this was true.

When I read the story, it was plain that there was no factual support for the claim, so I pretty much discounted it.

People-- all people-- will eventually understand the difference between this kind of impulsive story and the referenced, fact-checked articles that many outlets still produce.

I think it's also reasonable to start thinking about civil and criminal sanctions against people who negligently publish false, inflammatory, or defamatory content. Microsoft wasn't badly harmed here, but I think it has a claim in tort against Prevx, and in a more extreme case it might have had one against IDG as well.

Personally, I ran the Speeds and Feeds blog on the Microprocessor Report model. I followed my training: I just didn't publish anything I wasn't sure about. I still made mistakes, but only a few in the 2.5 years of the blog, and it was quick and easy to publish corrections.

If I'd been the one contacted by Prevx to get this story onto CNET, I'd have insisted on checking at least the obvious sources of supporting evidence, and assuming I couldn't find any, I would have positioned the company's statements as uncorroborated claims:

"In a blog posting today, security firm Prevx claims that flaws in recent Microsoft patches for Windows 7, Vista, and XP expose millions of users to the risk of a 'black screen of death' in which the usual Windows user interface fails to appear after rebooting, making the system useless. Prevx says it has received numerous reports of this problem, but my search of Microsoft discussion forums failed to turn up similar reports."

I simply wouldn't have published the piece based solely on Prevx's claims.

On the other hand, there was at least one story where I did publish incomplete information as the story developed; I just made it clear that I was giving all the information I had, and I refrained from leaping to conclusions.

http://news.cnet.com/8301-13512_3-10153939-23.html

Other sites published stories on the same subject at roughly the same time that leaped to just about every conclusion possible. Some of those stories are still online and still contain errors. Some of them were thoroughly rewritten to remove the errors, making it look like they were produced responsibly to begin with. All I ever had to do was add a few more facts, because I never said anything in the first place that needed to be changed.

-- png

Which is pretty close to my view. I also invited comparison to ClimateGate and what happened to Larry Summers when he was President of Harvard.

Alex replied:

Climategate:

While we all (mostly) disagree that "information wants to be free", "scientific data should be available" is a reasonable corollary, right? Also, the models need to be dragged into the open. Sunlight is important in this case.

Re: Black screen o' death:

Information needs to be kept in context: One company (of somewhat doubtful provenance) asserts that Microsoft is the Big Bad. Many (not all) run with it, many don't even bother asking MS for a reaction. This is the worst sort of yellow journalism.

Secondary effects of BSo'D scandal: PNG's observation to consider the source, certainly apropos when the barriers to entry as a journalist are so low.

But: What's Aunt Millie to do? Not install updates? Weigh multiple sources? Disconnect the computer and chase geese for their quills? Not care?

Which are good questions. My advice to Aunt Millie is "Don't Panic," and when her nerdy nephew suggests reformatting the hard disk, to ask "What does Microsoft say we ought to do?" And Eric Pobirs added:

I don't think all that much has changed since the turn of the 20th Century. It's just gotten faster. Big newspapers used to have multiple daily editions and smaller papers would stake out different times of the day to catch readers looking for information a few hours more recent than the other guys. This diminished as radio and then TV took ownership of time-sensitive reporting. Nowadays, a breaking story is a tweet from a cell phone.

People aren't getting any stupider or smarter. A story that appears on a thousand web sites within an hour of the first report gathers just as many suckers and skeptics as classics like the War of the Worlds broadcast or 'Dewey Wins' or Dan Rather blatantly trying to turn a presidential election with badly forged documents. When I was looking at comments on the Prevx site, there were some people saying they'd had this problem for months or years! Unless Microsoft is now testing a time travel update mechanism (XP SP3 is now a 2001 release), it should have occurred to these people that a Microsoft patch released only weeks ago could hardly be to blame.

Every year the BBC runs an April Fool hoax that bags an ample number of believers. Without fail, in every election season a candidate will have a statement attributed to them that was actually uttered by a comic on some venue like Saturday Night Live, and it will never die, even among the candidate's supporters.

If the entire population read the news with a discerning eye, electoral politics in this country would look very different. Nor do I believe most newspapers come even close to the journalistic standards they claim. A distinct tilt all too often creeps off the editorial and opinion pages into the rest of the paper. A publication with an honestly proclaimed agenda need make no apologies for how it interprets a set of facts. The primary concern is that the facts be facts rather than beliefs.

-- Eric

Of course Eric's last sentence is the important one, and also the most difficult to enforce, particularly in these days of competition to be the first to break a story and get an enormous Internet flash crowd. Internet fame is usually fleeting, but sometimes being first with a big story can have big positive effects on a site's permanent readership, while overstating the case doesn't usually get such large negative results. We're all trying to build readership, and the Big Story is tempting to those who do breaking news coverage. It's not a problem for me: BYTE used to come out at least a month after I wrote my column, so I got in the habit of looking for stories that would still be interesting in a couple of weeks; it was pointless to look for the daily flash news. I've kept that habit and I'm glad of it.

The bottom line to me is that the technical press is often just as tempted to run stories without authentication as the old Hearst papers ("You provide the story, and I'll provide the war") ever were. Be careful about swallowing breaking stories, and "Don't Panic" is still good advice.

The Sweet Spot Computer, Chapter 3

Jerry

Something you said last month, "I again caution you: the chip coolers that come with Intel CPU's are generally not adequate, and you're better off with any of several third party chip cooling systems," made me wonder: is that still true? So I set out to find out.

Currently I'm using an Arctic Cooling Freezer 7 Pro CPU heatsink/cooler. It has a 92mm fan, and it's not too tall or heavy: only four inches wide and five inches tall, and it weighs 520 grams, just over a pound. It came with its own thermal paste pre-applied. After I had ordered it, but before it arrived, I stopped in at Radio Shack and bought some silver-containing thermal paste for six dollars. Later I had cause to re-examine it. It is repackaged Arctic Silver 5, which is tied with a few others for top thermal paste in benchmark tests. Arctic Silver 5 takes 200 hours to burn in, so it is an impractical choice for testing CPU coolers in my basement. A while ago I bought some Gelid Solutions GC3 Extreme. It is tied with Arctic Silver 5 but does not require a burn-in, so I used that.

The ambient temperature of my basement ranged from 19 to 20C, so I was testing in a favorable environment. I collected the remaining temperatures with monitoring software.

1. Motherboard temp: motherboards take their own temperature. Throughout the test runs the motherboard temp (Temp1) was 27C at idle.

2. CPU temps: Core i7 chips report two kinds of temperature. The first (Temp3) is measured at the geometric center of the heat spreader, the metal top that covers the silicon CPU. Intel specifies a maximum case temperature (Tcase) of 72.7C for this chip, and it is this CPU temp that the limit applies to.

3. The third temperature I care about is the core temp. Core temp relates to a temp called Tj Max. This is not a place you buy clothes, but the temp at which the chip will start shutting down. This chip measures the temperature of each core, and reports a number that represents the "distance" to Tj Max. Intel has hinted that the measure may be non-linear, and is not accurate more than 50C from Tj Max. Since the Tj Max for the i7 860 and the i5 750 is 99C, temps under 50C are not reliable. That means I need both. Actually, I needed both, as you shall see.
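The "distance to Tj Max" arithmetic is easy to sketch. The Go fragment below is a minimal illustration, not how any particular monitoring utility works: it assumes a hypothetical sensor reading expressed in degrees below Tj Max, uses the 99C Tj Max quoted above, and treats readings more than 50C below Tj Max as unreliable.

```go
package main

import "fmt"

// tjMax is the throttle temperature given above for the Core i7 860 and i5 750.
const tjMax = 99.0

// maxReliableDistance reflects the note that the sensor is not accurate
// more than 50C below Tj Max.
const maxReliableDistance = 50.0

// coreTemp converts a hypothetical "degrees below Tj Max" reading into an
// absolute core temperature in C, and reports whether the reading falls
// inside the range the sensor can be trusted for.
func coreTemp(distance float64) (tempC float64, reliable bool) {
	return tjMax - distance, distance <= maxReliableDistance
}

func main() {
	// Illustrative readings only.
	for _, d := range []float64{65, 34, 16} {
		t, ok := coreTemp(d)
		fmt.Printf("distance %.0fC -> core %.0fC (reliable: %v)\n", d, t, ok)
	}
}
```

A distance of 34C works out to a 65C core temperature and is inside the trusted window; a distance of 65C works out to 34C and is not, which is why the heat-spreader reading is worth having as well.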

I brought Sweetie downstairs and set her up. I tested her with the Freezer 7 Pro as I had installed it. Then I tested the Intel cooler that came with the CPU. Twice, actually. Then I put back the Freezer 7 Pro and tested it again. The key difference is that after the first test, all subsequent tests were with the GC Extreme thermal paste. I wanted to see if a good thermal paste, comparable to one available for six bucks at Radio Shack, would give good enough results that you could avoid investing in a separate cooler. I tested Sweetie at idle (running at about 1200MHz) and under full load using LinX, a front end for Linpack, the utility Intel uses to test its chips. On my machine, LinX runs about seven and a half minutes for 20 repetitions.

With stock thermal paste, the Freezer 7 Pro at idle gave a CPU temp of 23C and a core temp of 34C. Under 100% load, the system temp rose from 27C to 28C by the end of the test; the CPU temp hit 55C and the core temp ran at 65C.

I replaced the aftermarket cooler with the stock cooler. Following theory, I used just enough paste to form a very thin layer. This was hard to do, since the paste is full of aluminum nanoparticles and really wants to be a block of aluminum alloy when it grows up. The CPU temp at idle was 33C. The core temp ranged up to 46C. Under full load the core temp shot up to 95C before I could stop it. The CPU temp under load was 83C, still rising when I killed the test. And the system temp had jumped from 27 to 30C in just a few seconds.

I pulled the stock cooler, cleaned it off with ArctiClean 1 and 2, and put on a thicker coat of thermal paste. To heck with theory! This time the idle CPU temp ran 22-23C, with a core temp in the mid-30s maxing out at 36C. So far, not much different from the Freezer 7 Pro. Under load, though, the system temp rose to 31C by the end of the test. The CPU temp was at 72C with a few excursions to 73C. The core temp stabilized at 83C. Given the results I got with the Freezer 7 Pro and the GC Extreme paste, I suspect that the results I had with the GC3 paste and the stock cooler represent about what you'd get if you simply used the patch of paste that came pre-installed on the cooler. I don't believe it is any coincidence that Intel's Linpack produced a CPU temp that hit this chip's 72.7C maximum case temperature dead on when running with the Intel cooler. So the Intel cooler is adequate, just. Actually, in a hot room you might have trouble.

I pulled the Intel cooler off and cleaned the CPU again. I carefully applied thermal paste to the Freezer 7 Pro and put it on the chip. Hmm. Too much. Removed the excess. Unlike the Intel cooler, the Freezer 7 Pro can be rotated to make sure you have good coverage with the paste. The CPU temp was 21C, with the core temp running at 32C on idle. Under load, the system temp crept up to 28C at the end of the test. The CPU temp was 54C and the core temp was 64C, with excursions to 65C. If you consider that the ambient temp had fallen to 19C, the numbers I got with the super duper thermal paste were essentially the same as those I got with the pre-applied paste. YMMV, but I think that pre-applied thermal pastes are good enough, unless you want to develop a new skill.
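Correcting for ambient temperature is just subtraction: compare each load reading's rise above room temperature rather than the raw number. The Go sketch below does that for three of the full-load runs. The 20C ambient used for the first two runs is an assumption (the top of the 19-20C range given above); only the 19C for the last run is stated.

```go
package main

import "fmt"

// loadRun records one full-load LinX run: ambient, CPU (heat spreader)
// and core temperatures, all in degrees C.
type loadRun struct {
	name               string
	ambient, cpu, core float64
}

// rise returns how far a reading sits above room temperature, a fairer
// basis for comparing runs made at different ambient temperatures.
func rise(reading, ambient float64) float64 {
	return reading - ambient
}

func main() {
	runs := []loadRun{
		// Assumed ambient of 20C for this run.
		{"Freezer 7 Pro, pre-applied paste", 20, 55, 65},
		// Assumed ambient of 20C for this run as well.
		{"Intel stock cooler, thick paste", 20, 72, 83},
		// Ambient stated as 19C for this run.
		{"Freezer 7 Pro, GC Extreme", 19, 54, 64},
	}
	for _, r := range runs {
		fmt.Printf("%-34s CPU +%.0fC  core +%.0fC\n",
			r.name, rise(r.cpu, r.ambient), rise(r.core, r.ambient))
	}
}
```

Under that assumption both Freezer 7 Pro runs show the same rise, about 35C for the CPU temp and 45C for the core temp, while the Intel cooler runs roughly 17-18C hotter above ambient.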

So, you were right: the stock cooler is a waste. It turns out that the US$35 Freezer 7 Pro is a pretty good cooler. Certainly the folks at Arctic Cooling have not been able to build a better one, and they've tried. This cooler is all I need for this machine, which should not be overclocked.

Why not? It turns out you can burn out a motherboard by pushing it too hard. I had a preview of that when the system temp shot up to 30C. The MOSFETs on my board are not cooled, so if I tried to do any work while overclocking, the board might fry.

So why does the manufacturer encourage us to OC? Heck, I just hit a big red button, restart and I'm at 3800MHz. A tweak or two and I'm at 4200MHz - a 50% overclock. Of course, I'm not foolish enough to do more than record the event and get out, but it's way too easy to get into trouble. And then in the BIOS, you have a really easy way to disable VDROOP, which can injure your board and/or your CPU.

So why do the makers put in these options? To sell motherboards, of course. They're not my friends; they want to sell me a motherboard. And of course, all of the MB makers do it.

But I've been bitten by the OC bug. It's a lot like hot-rodding, but cheaper. I have a new case, a new cooler (a Megahalems) and plenty of fans to swap in and out. I'm just waiting for a certain motherboard - an overclockable motherboard - to reach these shores. It hits my own personal sweet spot.

Oh yes, the newest wrinkle in power supplies: single rails. "Rails" in a PSU are circuits that supply power. My new Antec 750W PSU has four 12-volt rails, for example. When people put too many power-gobbling video cards and other things on a single rail rather than spread them out, they can damage or destroy their PSUs. There are now PSUs with a single 12V rail that make load-balancing unnecessary. They are still a bit pricey, but they're coming.
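Spreading loads across several 12V rails can be pictured as a small assignment problem. The Go sketch below is a simplification built on assumptions, not a wiring guide: the 20A per-rail limit and the device draws are hypothetical, and it pretends any device can go on any rail, whereas in a real multi-rail PSU each connector is tied to a fixed rail, so in practice you choose connectors. It places the largest loads first, each on the rail currently carrying the least current, and reports an error rather than overload a rail.

```go
package main

import (
	"fmt"
	"sort"
)

// Load is a hypothetical device drawing current (in amps) from the 12V supply.
type Load struct {
	Name string
	Amps float64
}

// assignRails spreads loads over the given number of rails, largest first,
// always choosing the least-loaded rail. It returns per-rail totals and an
// error if any load would push a rail past limitAmps.
func assignRails(loads []Load, rails int, limitAmps float64) ([]float64, error) {
	sorted := append([]Load(nil), loads...) // copy so the caller's slice is untouched
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].Amps > sorted[j].Amps })

	totals := make([]float64, rails)
	for _, l := range sorted {
		best := 0
		for r := 1; r < rails; r++ {
			if totals[r] < totals[best] {
				best = r
			}
		}
		if totals[best]+l.Amps > limitAmps {
			return totals, fmt.Errorf("%s (%.1fA) would overload rail %d", l.Name, l.Amps, best)
		}
		totals[best] += l.Amps
	}
	return totals, nil
}

func main() {
	// Hypothetical draws in amps at 12V.
	loads := []Load{
		{"video card 1", 18},
		{"video card 2", 18},
		{"CPU", 10},
		{"drives and fans", 3},
	}
	if totals, err := assignRails(loads, 4, 20); err == nil {
		fmt.Println("four rails:", totals)
	}
	if _, err := assignRails(loads, 1, 20); err != nil {
		fmt.Println("one rail:", err)
	}
}
```

With four hypothetical 20A rails the example loads fit; squeeze the same loads onto one 20A rail and the second video card is refused. A single rail sized for the supply's whole 12V output is what makes this bookkeeping unnecessary.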

Ed

Thanks. Another confirming instance of my conclusion that if you're building an Intel system, you'll probably want a better chip cooler than Intel supplies.

As I have said before, I never recommend overclocking; there's really no need. Anything you do that needs more computing power than your system usually supplies is generally not something you should be doing on that equipment.

I'm getting the urge to build some new systems again.

SRWare Iron and Threatfire

Dr. Pournelle

After reading your Chaos Review Letters, I too am extremely frustrated with Firefox (3.5). I thought it might be because my computer is old. It is a single-core 2.8GHz Pentium 4 running at 3.2GHz with 2GB of RAM on Windows XP (I know, practically a dinosaur), but I built this unit four years ago with the best components and it just keeps going strong. Firefox used to work fine; as a matter of fact, it used to be downright snappy. But now it is like a jet engine that keeps sucking in large flocks of geese. Startup time alone is about 10 seconds. Sometimes I open a tab and the program seems to freeze for 5 to 15 seconds before it starts loading the page. I was beginning to think that this old processor couldn't hack it any more. Maybe Firefox wants a multi-core processor, but why should it? I do have a 5Mb cable connection.

So I thought I would take your reader's advice and give SRWare Iron a try, and I am glad I did. I imported all my bookmarks from Firefox and, Bob's your uncle, welcome back snappy pages. No more irritating delays or freezes. I do not have Firefox add-ons like Adblock or Go to Google, but I can live with that for now; what I can't live with is the annoying, irritating delays of Firefox. You can get it from the SRWare site.

I also had Kaspersky antivirus software that expired, so I took your advice and am now running Microsoft Security Essentials for free. I also found another program, called Threatfire, that runs alongside antivirus software; it is supposed to protect you from zero-day exploits and other threats like key-loggers, rootkits, malware, spyware, etc. I checked it out, downloaded it, and started it up, and on its initial scan it found three minor worms on my hard drives. I have been using it for about three weeks with no problems, and it even updates itself automagically. It can be found here:

Thanks for all your help and insight.

Michael G. Scoggins

Thanks. I know little of Threatfire, but I don't think I have much need for that kind of program. The best security still consists of good habits: don't go to dangerous web sites, don't allow questionable programs to run on your machine, and don't open attachments to email unless you are certain the originator is genuine and smart enough not to send you something dangerous. Given those, I'm content to let Microsoft Security Essentials do the rest, but I do a weekly external scan on any machine I've sent to places that I don't really trust. Generally I use the on-line scan from ESET (Google "NOD 32" and look for the on-line scanner), but Norton has a decent one also. The very fact that I can get to external scanners is comforting, by the way: many malware programs disable access, and when that happens you know you have a problem.

I still use Firefox for most of my Internet work. With a good machine (multi-core and lots of memory) you can keep a lot of Firefox tabs open, and Firefox is still better than Internet Explorer if, as I do, you use open tabs as a sort of "To Do" list. Firefox will sometimes drive me nuts, as when "Colorful Tabs" wants to update just as I have gone looking for an article on medieval warfare or some such, but I still put up with it. The "Go to Google" and "Go To Bing" add-ons work just fine, and I haven't seen anything as useful in IE. I use IE when dealing with Microsoft, or when doing an on-line external scan. Microsoft seems to understand Windows, particularly Windows 7, better than Firefox does.

Google's new language "Go" (re November 29, 2009 mailbag)

Hi Jerry,

I spent last year working as a Windows programmer which I hadn't done for several years. I got the same sort of shock Rich Heimlich complained of in your November 29, 2009 mailbag: I spent (nearly) all my time learning new and poorly documented libraries and little time doing anything productive.

Over the last few weeks I've been starting to experiment with Google's new "Go" language: it's not ready for prime time yet (not by a long way: no Windows version, for example) but it does seem to be aiming at some of the right targets and avoiding many of the mistakes of C, C++, and Java.

At a minimum, it's encouraging to see language evolution that takes account of modern hardware, which now offers more cores rather than faster ones. Encouraging concurrency without inflicting threads on the poor programmer, and pushing error-prone memory bookkeeping onto the compiler and runtime now that computers are fast enough, are both good things.
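The idea of concurrency without hand-managed threads is easiest to see in a small example. This is a minimal sketch in present-day Go (the language's syntax was frozen with Go 1.0 in 2012, so details may differ from the late-2009 release described in the letter); the sum-of-squares task and the `sumSquares` name are purely illustrative. Each chunk of work runs in a goroutine and results come back over a channel, with no explicit threads or locks in the program.

```go
package main

import (
	"fmt"
	"sync"
)

// sumSquares splits nums into at most `workers` chunks, sums the squares of
// each chunk in its own goroutine, and combines the partial results that
// arrive over a channel.
func sumSquares(nums []int, workers int) int {
	if workers < 1 {
		workers = 1
	}
	results := make(chan int, workers) // room for every partial sum
	var wg sync.WaitGroup

	chunk := (len(nums) + workers - 1) / workers // ceiling division
	for start := 0; start < len(nums); start += chunk {
		end := start + chunk
		if end > len(nums) {
			end = len(nums)
		}
		wg.Add(1)
		go func(part []int) {
			defer wg.Done()
			s := 0
			for _, n := range part {
				s += n * n
			}
			results <- s
		}(nums[start:end])
	}

	wg.Wait()      // all goroutines have sent their results
	close(results) // lets the range loop below terminate
	total := 0
	for s := range results {
		total += s
	}
	return total
}

func main() {
	fmt.Println(sumSquares([]int{1, 2, 3, 4, 5, 6, 7, 8, 9, 10}, 4))
}
```

The runtime decides how goroutines map onto operating-system threads and cores; the buffered channel is sized so that every worker can deliver its result before the final loop starts reading.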

It will be interesting to see how the Go language fares as it gets extended and ported to more existing environments: in particular native GUIs on Windows, OS X, and Linux are going to be as challenging as ever, and the size of the language and associated libraries will obviously grow.

The main web page is here:

A good technical introduction by Rob Pike (of Unix fame; one hour, very fast-paced) is available on YouTube.

From the FAQ, on why you may be interested:

Why are you creating a new language?

Go was born out of frustration with existing languages and environments for systems programming. Programming had become too difficult and the choice of languages was partly to blame. One had to choose either efficient compilation, efficient execution, or ease of programming; all three were not available in the same mainstream language. Programmers who could were choosing ease over safety and efficiency by moving to dynamically typed languages such as Python and JavaScript rather than C++ or, to a lesser extent, Java.

Go is an attempt to combine the ease of programming of an interpreted, dynamically typed language with the efficiency and safety of a statically typed, compiled language. It also aims to be modern, with support for networked and multicore computing. Finally, it is intended to be fast: it should take at most a few seconds to build a large executable on a single computer. To meet these goals required addressing a number of linguistic issues: an expressive but lightweight type system; concurrency and garbage collection; rigid dependency specification; and so on. These cannot be addressed well by libraries or tools; a new language was called for.

Best regards,

Giles Lean

Laudable goals. I can only comment from a distance, since most of my programming experience was in Pascal and Modula 2, with some practical work in Compiled BASIC (the Pascal-like version that let you turn on a number of restrictions like required declarations). In my view a properly structured program combined with a language that does strong type and range checking and strict syntax results in far less programming time overall: the initial time to get it to compile is a lot longer, but there's a lot less debugging time, and if the program compiles at all it generally does what you expected it to do. The strongly structured languages I prefer pretty well lost out to the C variants, although there are still places that use the older languages to advantage.

I can only watch the new language wars with some interest. The goal of the computer revolution is, for me, to get to the point where it is more important to think of something for the computer to do than to learn how to teach it to do it: which is to say, something close to natural language programming. We're a long way from that. Indeed, programming theorist Edsger Dijkstra thought it more difficult than I believe it is. His essay on that is well worth your time.

As to interpreted languages for quick and dirty work like filtering files, Python runs on both Windows and Mac OS, and it comes already installed on Mac OS X. Python plus Learning Python from O'Reilly are tools most of those interested in programming should have. There are also many free introductions to Python, as well as older editions of the O'Reilly book available at low cost.

I was looking for advice on what phone to get for Roberta. Rich Heimlich recommends the Droid as the iPhone for iPhone haters. Asked to expand on that, he wrote:

See my blog entries for details. It's a LONG list of things.

http://blog.pcserenity.com/2009/11/i-join-touchscreen-revolution.html

http://blog.pcserenity.com/2009/11/droid-physically-speaking.html

http://blog.pcserenity.com/2009/11/droid-does.html

I DESPISE iTunes, so I agree with Eric. Not having it is absolutely a feature. Amazon support is already there via an included app called Amazon MP3. However, I don't buy online music, so I really don't care about that. The only music I care about is full CD-quality, and now that Music Giants has gone under I have no interest until something similar returns. As far as getting videos and such, there are many solutions for that, though I don't really do video on my phone even though it does it rather decently.

The biggest hurdle for most people, I suspect, would be a) going with Verizon if you're not already with them, as they are sort of the Microsoft of the mobile world (or maybe the Comcast), and b) being comfortable with essentially giving up any concerns about Google and just going down their path. The latter was my big issue, as I was already with Verizon. Google's solutions aren't fully polished yet, but what's here is either workable, full of amazing promise, or flat-out awesome.

Being able to share calendars between family members and friends on my phone is a new eye-opener. Being able to get reviews instantly by scanning a barcode while shopping (and getting price quotes) is great. The navigation stuff is incredible. I can text while driving with ease, because my text messages are spoken to me and I reply by speaking. It's not 100% and never will be, but I find it good enough. My desktop, for the first time in my life, feels barren and useless because it never has e-mail waiting for me anymore. I get 99% of it on the phone and, much to my surprise, that's a-okay.

Android Market isn't iTunes or even remotely close to it but it's just fine for my needs. I already have over 40 apps on the phone in just a couple weeks of use. I stream my AM radio talk shows, get great Pandora, the audio quality is better than any phone on the market, my voicemail is transcribed for me and my cell uses Google Voice entirely for all voicemail giving me free Visual Voicemail and other great features like custom greetings for different callers, etc. I've given up Trillian because Gmail's plugins for Google Talk and AIM are both good enough and cover 90% of the users I interact with. I can customize the phone endlessly which I always appreciate.

I believe the success of the Droid will also drive incredible evolution in Android Market. It got to where it is (a tenth the size of the iTunes app store) on the power of one okay phone from a secondary carrier over the years. Now it's going to flourish.

I should mention that there are several things that I don't like about the software but have faith Google will get around to fixing. For example, Gmail's insistence on not supporting folders. Its refusal to support RSS. Their contact manager can't sort by last name first, so if you want that you have to enter people that way. Some of the phone apps are well behind what the mobile web versions can do, so, for example, I have a Gmail app and a Gmail Mobile link. I ASSUME the latter uses more battery power as it has to drive more online data through, but maybe it's inconsequential. But hey, the first time I connected my contacts and it went out and brought in ALL my Facebook friends with all their contact info AND their pictures to use as contact pictures, and I could easily text them, IM them, call them at home, work or cell, or post to their Facebook page, that got me realizing that it's all just a matter of time for Google.

One other one that just BLEW ME AWAY:

I got a call from my wife. I forgot a doctor appointment. I didn't know where it was. I bring up the Google Maps app. I speak, "Navigate to Doctor E in Haddonfield". I said nothing about a street or a state. It pulls up an entry and shows it to you. Yep, that's the one.

It then plots the route. I can then add Satellite view and live traffic (which is free here and costs extra with all the other GPS providers). Once I get there the map switches to Street View and turns to face the building, showing me exactly where I'm supposed to be. "Oh, yeah, the big red brick building. Excellent."

Another shocker came when I used an app called Where. I decided I would try a new pizza place it recommended. I clicked a setting noting I'd be going. Two amazing things happened. First, a friend saw it and stopped by. Second, someone I don't know posted a "social wall note" telling me to ask for the "free dessert" and sent along a coupon for a free entree that showed up on my phone. So I showed the phone to the waitress. She took it up to the front, scanned it and came back. Free entree. I mention a free dessert and she brings us out a group of cinnamon dough "knots" that were fantastic.

Frankly the whole experience scares me just a little but in a "good" way?

Rich Heimlich

All of which is tempting. I don't hate the iPhone, in fact I like mine a lot, but I really dislike the bondage to AT&T. AT&T has essentially zero coverage at my house, at Niven's house, at my son Alex's house, and most places I tend to use the iPhone a lot. The Wi-Fi works fine if I'm somewhere there is Wi-Fi access, and I will grudgingly admit that I'm often in such places and the iPhone has been very useful; but at home, neither AT&T nor Wi-Fi works well in some parts of the house. That's of course my fault, and I need to install another D-Link Wireless Router in the back part of the house so I'll have some coverage everywhere.

I used to have a telephone service booster that added a couple of bars to my wireless telephone signal strength, but the unit died and the company that made it seems to have vanished. Alas. I should look into finding another, since AT&T doesn't look likely to restore the service I used to get before they bought out Cingular. Cingular worked fine here: I presume AT&T closed some relay towers when they took over. If it sounds like I don't have a very high opinion of the modern AT&T, your inference is correct...

I can get Droid with Verizon. Verizon users tell me they get good signal strength here at Chaos Manor...

In any event, I am pleased that competition is driving the pocket computer business. I hope Droid and the iPhone remain at each other's throats for years. And just maybe the map advertisements will shame AT&T into having at least as good coverage as Cingular used to have before it was absorbed into the Borg.

Last month I mentioned using my Plantronics DSP headset for conferences such as TWiT.

Plantronics DSP-500 unavailable from Amazon and appears to be discontinued

I clicked on the link for the Plantronics in the international column draft you sent and Amazon shows that this model is unavailable. I went to Newegg and they show it as discontinued. Amazon does carry the DSP-400, but I don't know how it compares.

--

Richard Samuels

I get the same message from Amazon, and I am astonished. I would presume Plantronics will come up with a new DSP headset soon enough. My DSP-500 works so well I never think about it, and certainly haven't thought about a replacement. I'll let my readers know when the new ones come out; I've certainly been happy for years with Plantronics, both for comfort and for sound quality. I have a new Plantronics Bluetooth headset I'll be reporting on in the column.

I sent a copy to Plantronics, and Plantronics Spokesman Dan Race adds:

Thanks for your note. You're right that Plantronics will be announcing a new lineup of Audio products very soon. CES is right around the corner. You can inform your reader that he/she will see the new products online and in-store in early January.

Cheers,

Dan Race

Corporate Communications

Plantronics, Inc.

Thanks!

A comment on the recent Microsoft Professional Developers Conference

Microsoft PDC

Jerry,

I am very concerned about Microsoft's application development direction presented at PDC. As with .NET, this appears to be yet another attempt by Microsoft to "lock in" development to the Windows environment. What is really needed is cross-platform development tools, so that applications can be deployed on whatever platforms suit the requirements of each individual user. Contrary to Microsoft's claims, Windows is simply not appropriate for all users.

Bob Holmes

Well, perhaps; but I was greatly impressed with what Microsoft showed in application development tools usable by people with business experience but no training as programmers. What I saw was that a team of two, a business expert and a programming generalist familiar with the new Microsoft tools, could do some very complex programming in less than a month [I'm guessing here, of course], and some big revisions to that in days [they demonstrated that. Rehearsed demonstration, of course]. The linkages and modeling capabilities impressed me greatly.

Now of course there were bugs and glitches; this was a PDC and the software presented was mostly beta; but in my experience the wheels of Microsoft may grind slowly, but they grind exceeding fine: It will work pretty well by the time it's released. There was a time when Microsoft product releases were really beta releases, and everyone knew not to install a major Microsoft product until after Service Pack One; but now they've learned to call them beta releases. Microsoft waits for error reports and does considerable debugging before the formal release. I expect great things to come from what I saw at PDC.

I agree that cross-platform tools are an ideal to hope for, but in the real world most development is done by people paid to do the development. Not all, of course, but most. Microsoft has put together some good teams and now that Google (with the aid of the cloud) is giving them competition they'll get better. Windows 7 is already good enough that it's a matter of preference rather than necessity in choosing between Apple and Microsoft for an operating system. Competition is improving Apple, too.

Now that Google is out there to compete with both Microsoft and Apple, I expect big advances in the computer revolution. They'll start at Developer level, but it won't be long before they filter down to the rest of us. Hurrah.

System Programmer Phil Tharp recently wrote me about his newest network server system. I asked him to expand on that for my readers. Incidentally, Phil is an Apple enthusiast for personal computer work.

Re: neat feature of the new heavily power-managed Xeons

Recently, I needed to build a new network for the lab.

Like every network that connects to the Internet, mine needed a network edge device. In the small to medium sized world I work in, that usually is a single box that provides a firewall, DHCP server, DNS server, web server, file server, and VPN. Most of us are familiar with the plastic all-in-one boxes sold by D-Link, Linksys, and others, which also include a wireless base station. The plastic boxes are not powerful enough to keep up with the higher tier services Comcast provides in the Bay Area, currently 50 megabits/sec down and 10 megabits/sec up.

Further, most of the plastic boxes don't provide inbound VPN. With inbound VPN, I can securely connect to my local network from anywhere on the Internet. Secure Shell (SSH) service is also not usually provided. SSH is very useful if you are running an SVN server and just need to reach the server. No VPN required.

In the past, I have used mini-ITX motherboards from VIA with two network interfaces. However, I am now in a funded startup, and felt we needed something a little more commercial and readily available. Enter the Dell PowerEdge T310 server.

I had just purchased an Apple Xserve for the princely sum of $7,000.00, and I thought perhaps I should check out the mainstream world. I knew from visiting my wife's lab at Intel that they use HP. I also knew other folks were happy with Dell. HP's line jumps to over $1,000.00 to get two NICs. In the case of Dell, it turns out a single-chip, 4-core Xeon 3400 system based on the Nehalem architecture, with 4GB RAM, a 250GB hard disk, and the all-important two GigE NICs, was $750.00 delivered.

The machine showed up in 3 business days. I had ClearOS (based on CentOS 5.4) up and running in 2 hours, fully configured as a gateway with all the services mentioned above. Performance was excellent. Build quality of the hardware was excellent. It's not Apple industrial design, but it is very well done.

The Nehalem-architecture CPUs incorporate aggressive power management: if only one or two of the 4 cores are running, they are given significant clock boosts, and when little is going on, the CPU as a whole conserves power. The effect is large enough that the fan noise changes very noticeably.

It turns out there is a significant benefit to this. The T310 sits in a corner of my lab. If the load on the CPU increases, I can hear the fans speed up. Over the last two days I have been able to detect when someone is trying to hack in from outside based on fan speed. As a result, I have been able to fine-tune ClearOS to fend off those hacking attempts. Nice side effect.

Phil

Thanks. It's always good to hear from people who use these systems and find out what they rely on. I recall when I managed political campaigns [1967 - 73] our telephone switchboard systems were a significant part of campaign headquarters costs. Now a relatively inexpensive machine does far more than a PBX with or without receptionist.
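Phil's ears are acting as a load monitor. For readers who would rather not sit next to the server, here is a minimal Python sketch of the same idea, assuming a Unix-like gateway such as the CentOS-based ClearOS box (os.getloadavg() is not available on Windows). The function names, the 2.0 threshold, and the five-second sampling interval are my own illustrative choices, not anything ClearOS provides or anything Phil said he used. It reports only the moment the load first climbs past the threshold, the software analogue of hearing the fans spin up.

```python
import os
import time
from typing import Iterable, List, Tuple


def poll_load(count: int, interval_s: float = 5.0) -> List[Tuple[float, float]]:
    """Take `count` samples of (timestamp, 1-minute load average).

    Unix-only: os.getloadavg() does not exist on Windows.
    The interval is an illustrative assumption, not a recommendation.
    """
    samples = []
    for _ in range(count):
        samples.append((time.time(), os.getloadavg()[0]))
        time.sleep(interval_s)
    return samples


def load_rise_events(samples: Iterable[Tuple[float, float]],
                     threshold: float) -> List[float]:
    """Return the timestamps where the load first climbs above threshold.

    Only the crossing from below to above is reported, so a long busy
    stretch yields one event rather than one per sample.
    """
    events = []
    above = False
    for ts, load1 in samples:
        is_above = load1 > threshold
        if is_above and not above:
            events.append(ts)  # load just crossed the threshold
        above = is_above
    return events
```

Something like load_rise_events(poll_load(count=120), threshold=2.0) would cover ten minutes. The timestamps it returns only say when the box got busy; deciding whether that was an attack still means reading the logs.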

We have previously mentioned the Roku digital player.

TWiT on the Roku device

Dr. Pournelle,

The Roku devices just got upgraded to add about twenty new channels, one of which is TWiT TV. Your episode was the current one when I upgraded, and everyone looks great on TV. The feed wasn't quite as speedy as Netflix, but there wasn't too much hesitation, and it was fun to watch that way.

--Milton Pope

Windows delayed write and Windows 7

Dr. Pournelle,

I read on your site recently that you back up to an external USB drive.

Are you no longer concerned with the Windows delayed write problem? Have you gotten around this problem? And, do you know if it still persists in Windows 7?

Marty Stephens

I have not seen the Windows "delayed write" problem in more than a year, and had actually forgotten about it. It used to be that using USB connections to copy large blocks of files was a bad idea: better to break up the copy process into several smaller blocks, lest the "delayed write" error clobber the whole copy process.

When terabyte drives came out I was concerned that the "delayed write" error would get worse, but actually before that time it simply stopped happening. My cursory research indicates that it hasn't been a problem for a couple of years; I certainly don't recall when I last got that error, and I've been copying files off to terabyte USB drives for more than a year.
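The old workaround of copying in smaller blocks is easy to automate. This is a minimal Python sketch, assuming a flat source folder; the function name and the batch size of 100 are hypothetical, and it is a generic batching approach, not a fix Microsoft documented for the delayed write error. After each batch, every file in it is forced out of the operating system's write cache with os.fsync before the next batch starts.

```python
import os
import shutil
from pathlib import Path
from typing import List


def copy_in_batches(src: Path, dst: Path, batch_size: int = 100) -> List[int]:
    """Copy the regular files directly under src into dst, batch_size at a time.

    After each batch, every file in it is flushed to disk with os.fsync
    before the next batch starts. Returns the number of files in each batch.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    dst.mkdir(parents=True, exist_ok=True)
    files = sorted(p for p in src.iterdir() if p.is_file())
    batch_counts: List[int] = []
    for start in range(0, len(files), batch_size):
        batch = files[start:start + batch_size]
        copied = []
        for f in batch:
            target = dst / f.name
            shutil.copy2(f, target)  # copies file data plus timestamps
            copied.append(target)
        # Batch boundary: push this batch out of the OS write cache.
        for target in copied:
            with open(target, "rb+") as fh:
                os.fsync(fh.fileno())
        batch_counts.append(len(batch))
    return batch_counts
```

A call such as copy_in_batches(Path(r"C:\Data"), Path(r"E:\Backup\Data"), batch_size=200) would do the job; both paths are placeholders.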

This Should Get Clippy Working On Windows 7 For You

Jerry,

There is a hotfix for Windows 7 that installs the Microsoft Agent components. You may have to run a repair install of Office 2003 after you apply the hotfix to get the Office Assistant to work, but I would try it with just the hotfix first.

For the few who may not know, Clippy was a feature in Office through version Office 2003; he was an animated paper clip who helped in searches and made often clueless suggestions. I may be the last person on Earth to like Clippy.

Clippy doesn't exist in Office 2007 and beyond, and I'm upgrading most of my systems to 2007: I find that my major complaint, that enormous space-eating ribbon in Word, is tamable with control-F1. With the ribbon toggled out I have my desk space back, and I find that a week of frustration unlearning old habits and learning new ones has let me appreciate the new command organization in Word 2007. Meanwhile, Outlook 2007 really is a significant improvement over 2003.

The result is that Clippy only exists on my IBM T42p ThinkPad (Word 2003 on XP), and alas, the ThinkPad is old enough to need replacing. The replacement will get Office 2007 along with Windows 7, leaving FrontPage 2003 as the only program I'll be using that still could have Clippy, and I don't miss him there. So, I fear, it's goodbye Clippy. Most won't mourn him, and I suspect I'll survive. I will miss him when I think of him, but I expect that won't be often.

open files...

Dear Mr Pournelle,

I just read your latest "Computing at Chaos Manor" column (Dec. 7th). (I used to be a Byte reader a very long time ago...)

Just two points...

1. Using Process Explorer (used to be on www.sysinternals.com but now it is Microsoft...) you can Ctrl-F for any file/dll using full name or just part of the path and it will tell you what processes are using it... I find it very useful.

2. I too used to use Norton Commander... now I have switched to Total Commander, a full 32-bit replacement. Key shortcuts work the same as in NC, and there are some Windows-only improvements you may like.

Best regards,

Antonio Romeo, Rome

I have tried Total Commander, and thanks. It works, but I remain used to Windows Commander, which also still works and my fingertips know its commands at the cellular level. They work on Total Commander, but the appearance is different, and I sort of gave up on it for no particular reason until composing this reply. Then I had another look.

You are right, there are some improvements. And I do like it. Thanks. I do wonder why Windows gave up supplying a file manager; perhaps Commander was so useful? But then why did Commander vanish? I don't understand users sometimes.

Perfect E-reader

Jerry:

I have spent quite a lot of time seeking the "perfect ereader" (for my tastes). I think I have finally succeeded. The ASUS EEE-PC Tablet Netbook (T91MT) is fantastic. Running Windows 7, the whole device is the size of a trade paperback, but smaller than the typical hardcover. I have installed FBReader, Kindle for PC, Microsoft Reader, and a couple of others. With Adobe installed, and Windows native NotePad, I'm ready to read any format from anywhere (provided that reader software exists that runs under Windows). A bit of customization to Windows 7 widening the scroll bars and upsizing some buttons makes the T91MT "finger friendly". I had previously tried a Toshiba Tablet PC, and the need to use the stylus instead of fingers was a MAJOR drawback. Plus the fact that the Toshiba was just too darned big.

HIGHLY recommended.

Duane

Alas, it is listed as no longer available but there is one like it. The ASUS Eee PC T91MT-PU17-WT Tablet Black Netbook.

For the moment I still prefer Kindle, but not by a lot.

I still believe that eventually everyone will carry one electronic item that combines tablet/notebook, Internet browser, camera, telephone and videophone, and pocket computer. I wouldn't mind having to carry a small carry bag if I could get that - and of course if there were universal Wi-Fi availability. We're not there, but we've come a long way.

I agree that reading on a Tablet PC (in my case my elderly HP Compaq) is easier than on a Kindle, but of course there is the problem of battery life, and the Compaq is a bit large and heavy for carrying everywhere.

Rumor has it that Steve Jobs finally has an Apple Tablet he likes and we'll see it in January. I can hardly wait. I like Tablets a lot.

On last month's XP annoyance:

An XP Annoyance

Yeah. Happens all the time.

Many Windows programs create a handle to a file and then don't clean up after themselves when they close. Microsoft's own programs are some of the worst offenders.

Process Explorer provides a way to search for open handles and force them to close. Since Microsoft bought this program, it is a wonder that they haven't incorporated it into Windows.

Also useful in this regard is What's Running, which is the quickest way to kill any running process. In fact, I usually have this program running all the time, just in case.

--

Dr. Paul J. Camp

Spelman College

Thanks! What's Running is interesting.

Ratfor reminder

Ratfor (RATional FORtran) was written in Ratfor and output standard Fortran 66. Nothing else was needed, even in the "Good Old Days", once you had bootstrapped it onto your machine.

This was very much like K&R C and most follow-on languages, not surprising since K was the same Kernighan who developed Ratfor.

Fortran 77 added control structures that made Ratfor much less necessary and Fortran 90 added many scalar operations that could be performed on entire arrays, making many matrix libraries redundant and obsolete.

I'm hardly an expert anymore, but I saw very little Ratfor after Fortran 77 compilers became common.

JED!

I may be the last person left who misses RATFOR. FORTRAN was my first official programming language (other than machine language for the IBM 650. Ugh.) and when I discovered RATFOR would catch my syntax errors quickly and efficiently I loved it. It also forced reasonably sane program structures.

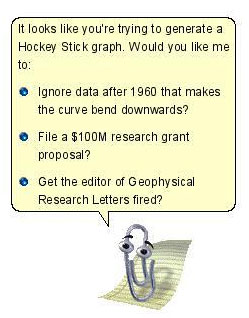

I haven't done a FORTRAN program in two decades, and I doubt I ever will again, but most of the climate programs are done in FORTRAN and someone is going to have to check them. Of course most of the climate modelers are very coy about releasing their source code. (Image courtesy of Casey Research)

PC Security

Dear Jerry,

I was so pleased to see the improvement in your health and condition... I wish you well for the future.

You must get too many 'Why don't you use ...' communications so I will be brief and expand later if necessary.

It seems to me that the emphasis on many forums and discussion columns relating to PCs is centered around security issues. I do not maintain that these are unimportant but surely what is more important is what the computers can do. I recently subscribed to a pretty good site that reviewed and recommended the best free software for Windows. Unfortunately it changed so that by far the most frequently discussed items were those of security - how to protect your PC (and spend ages doing it) not what you use the PC for.

I have a simple solution that I have modified over the years and I get very few security problems that I am aware of.

Now my solution is as follows :-

I use software called BootIt NG to create multiple primary partitions (the software allows this) on a hard drive.

Then I install XP, all the basic drivers and some basic software (freecommander, notepad++ etc.). Then I copy the partition and make 4 or 5 copies. I label them XP Work, XP Experimental, XP Games, XP Backup, each about 15-20 GB. I have 2 data partitions, depending on the size of the hard drive: one NTFS for video work, the other FAT32.

I also create a Linux partition (sometimes 2)

I then create a menu system in BootIt NG that accesses each of these OSs from the menu. Each OS can 'see' only its root directory and either one or two of the data directories.

In use the games OS does not access the Internet (I don't play games that way) and is designed for speed and simplicity (no firewall needed, no virus protection, no complications.)

One OS is my main working OS with firewall (Comodo), Skype etc - I take few risks when browsing the Internet here.

One OS is for experiments. I install software here first to see what it does to the OS; if I don't like it, I scrap the partition and copy the basic partition back to re-use as experimental. I would always install Picasa here first to make sure I understood what it was trying to do to my system.

I install Linux Mint, which I use for any Internet work that might expose me to viruses (BitTorrent, etc.). I also use Mint to access media files; it seems to do this flawlessly. I can also use Mint for my work.

I don't remember the last time I had a virus or malware problem (except sometimes while experimenting) in my main systems, and I feel comfortable trying out new software on an OS I can easily scrap; copying a 15GB partition takes only a few minutes. I can try out different Linux distributions if I feel so inclined.

I have three PCs at home: one for work, one for video processing, and one the children use to play games and watch YouTube (using Linux). My PC at work is set up similarly.

It works well for me, I can't say that it is popular with my colleagues...

I haven't tried Vista (though I have seen PCs with it installed) nor have I tried Windows 7. XP does all of what I need right now.

Well that's my 2 cents worth.. Thank you for your columns over the years (I subscribed to Byte primarily to read your column).

(Interestingly my PCs were recently submerged for some hours under the flood in Manila in September - all the hard drives worked flawlessly afterwards. In fact most of the components worked after drying out - the power supply being the component that failed in all the PCs.)

Regards

Paul Hartley

For all the talk about PC Security, I have had few issues. I was bitten by Melissa (and infected some of those in my mail list until I realized what was happening and pulled the plug on my machine so it stopped sending the virus to everyone whose address it could find) but that was over a decade ago, and I have had no problems since.

Roberta got an infection from a fraudulently placed pop-up ad on the New York Times website. She thought it was safe, and then when the "Times" invited her to download a defense against malware she tried to exit the offer by clicking its red x, and that got her. I found it easier to replace her machine than scrub it - but then it was long past time I updated her system anyway.

A virus filter apparently wouldn't have helped prevent that particular infection anyway. My fault: I should have warned her about what to do when you get a suspicious offer. Clicking anywhere on the malware window will have the effect of accepting the "offer"; the little red close X takes you to the same place that any other part of the malware window does. What she should have done was close the Internet Explorer window, or even reset the machine. A well informed user remains the most effective protection device.

The upshot is that I find Windows 7 with Microsoft Security Essentials works well enough. I get a lot of work done and don't have to think too much about security, despite getting tons of mail including huge quantities of what I assume are infected attachments. Since I never open them I don't care much; every now and then an external scan will tell me that a bunch of attachments I haven't got around to erasing yet are infected, but since I never open them they have done me no harm. I just erase them. If something were scanning them as they came in it would use up a lot of time to no real purpose.

I liked XP a lot more than the early versions of Vista, but modern updated Vista is not bad. I prefer Windows 7 to both, but it's no longer mandatory to replace the latest Vista with Windows 7.

Computing At Chaos Manor: The Mailbag

Mr. Pournelle:

Re the November 29 "Computing At Chaos Manor: The Mailbag": you quote a "Jim Potter" complaining about a failure by Firefox to honour what he called the "html mailto tag".

The code quoted is:

<a href="mailto:name@isp.net" <mailto:name@isp.net> > name@isp-life.net</a <mailto:name@isp-life.net> >

It likely did not work because it is not valid HTML. The complaint says that this code works with Internet Explorer, but this is hardly surprising, since Internet Explorer is notoriously forgiving of coding errors. It is very common for people to complain that pages work with Internet Explorer, but not with Firefox or other browsers, and when this happens the problems are almost invariably caused by the use of invalid code in the web pages.

There is a program called an "HTML validator" which can be used to identify HTML syntax errors, and a free validation service is available at http://validator.w3.org/ . Someone who makes a web page should validate their page using both HTML and CSS validators before they do anything else, because validators can report many errors which cause broken web pages.

Mr. Potter's code could instead be the much simpler (and valid):

<a href="mailto:name@isp-life.net">name@isp-life.net</a>

Regards,

Chuck Upsdell

Thanks
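A validator is the right tool for finding errors, but it can also help to see what a parser actually extracts from the corrected link. The sketch below uses Python's standard html.parser module; html.parser is lenient and is not a validator, so it is no substitute for http://validator.w3.org/. The class and function names are my own.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class MailtoCollector(HTMLParser):
    """Collect (href, link text) pairs for <a href="mailto:..."> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href") or ""
            if href.startswith("mailto:"):
                self._href = href  # start collecting this link's text
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def mailto_links(html: str) -> List[Tuple[str, str]]:
    parser = MailtoCollector()
    parser.feed(html)
    parser.close()
    return parser.links
```

Fed Mr. Upsdell's corrected line, mailto_links returns exactly one pair: the mailto: address and the visible text, with nothing left over.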

Computer languages and complexity

I think you and your commentators miss the point. Yes, the example your correspondent lists for parsing an RSS feed isn't simple, but it's doing a hell of a lot. Compare this to writing a routine to parse the raw XML as a string of text. Those five lines of code rest on the shoulders of maybe a dozen or so prepackaged libraries - and that's not a bad thing.

Code generation, for the most part, utterly failed. What we have instead are increasingly abstract layers of human-generated code, which to my mind is much more powerful in terms of developer productivity. When I code in Flex (a language that makes it easy to write Flash-based applications), a single line of my code might trigger tens of thousands of lines of other people's code at all layers of the stack: in the Flash Player, in the browser, in the OS, in the server I am talking to.

Every generation of new technology has increased my productivity exponentially as I've moved higher up the abstraction stack. I don't have to code raw http requests, I don't have to parse xml text, I don't have to write a linked list or a hash table, I don't have to write sort routines, I don't have to draw my own visual widgets with hand written code, I don't have to worry about memory allocation and management, I don't have to worry about installation routines, I don't have to understand the PDF file format to write a PDF, I don't have to write and read files bit by bit, I don't have to worry about the byte order of my CPU.

Increasingly when I code I can just focus on the problem I am trying to solve, and its unique requirements, those areas for which nobody has yet anticipated a need for a prepackaged library. Every year those uncovered areas grow smaller.

--

Joshua Vanderberg

Thanks
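Mr. Vanderberg's point is easy to see in practice. The correspondent's RSS example isn't part of this letter, so what follows is a Python stand-in, not a reconstruction of it. Using the standard library's xml.etree.ElementTree, pulling titles and links out of an RSS 2.0 feed takes a few lines, because the library handles tokenizing, entity decoding, and element nesting. The sample feed and the function name are invented for illustration.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Invented sample feed; note the &amp; entity the library will decode.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example feed</title>
    <item><title>First post</title><link>http://example.com/1</link></item>
    <item><title>Fish &amp; chips</title><link>http://example.com/2</link></item>
  </channel>
</rss>"""


def rss_items(xml_text: str) -> List[Tuple[str, str]]:
    """Return (title, link) for every <item> in an RSS 2.0 document."""
    root = ET.fromstring(xml_text)
    return [(item.findtext("title", default=""), item.findtext("link", default=""))
            for item in root.iter("item")]
```

rss_items(SAMPLE_FEED) returns two (title, link) pairs, with "Fish & chips" already decoded; doing the same by scanning the raw text for angle brackets would take far more code and be far easier to get wrong.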